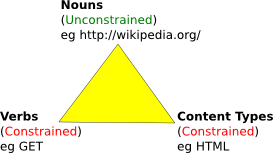

The REST architectural style is not well named, and is often not clearly understood. Of course it features of statelessness and layering that lead to high performance and scalable technical solutions, but most people can take these features for granted. Most people just want to know how to design their software according to the REST style. REST is about transferring documents between resources using a limited vocabulary of methods and a limited number of document types. Each resource demarcates a subset of an application's state, and becomes a handle by which other applications can interact with that state.

REST stands for REpresentational State Transfer. Unfortunately, this doesn't mean much to anyone not already caught up in the jargon of REST. Let me translate: REST stands for information exchange through documents. Representation is the REST term for what we commonly call a document. It encodes some useful information into a widely-understood document format such as HTML. The rest of the REST acronym is just saying that the exchange of these documents using a limited set of methods are the sole means of communciation between clients and servers in the architecture. The acronym doesn't really spell out many of the REST architectural constraints, and doesn't highlight the importance of resources in the architecture at all. Perhaps this has contributed to the popularity of the Resource Oritented Architecture TLA.

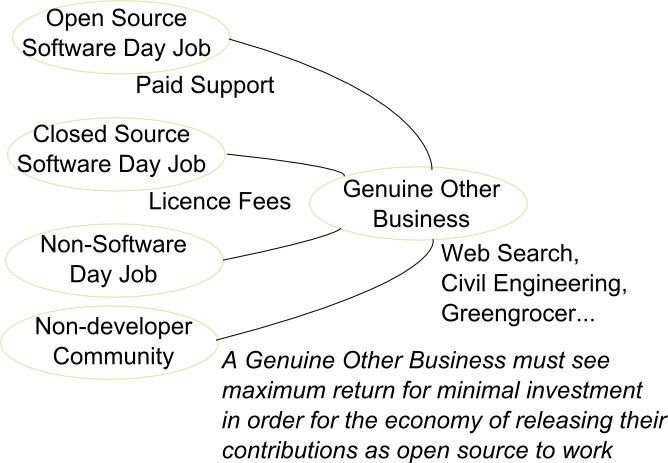

I think that the REST moniker came about at a time when developers thought they could just make distributed software like regular software with transparent remote procedure calls governed by defined Interface Description Language files. The rise of Service-Oriented Architecture as a notion separate to the Web Services stack seems to be helping developers see their IDLs as protocols that need careful attention as systems evolve. Developers are more comfortable with the concept that messages are the way systems interact with each other. In this environment, the focus of the REST acronym no long cuts as deeply. Now, REST's revolutionary facet is in the transition from object-oriented interface design to resource-oriented interface design.

REST's underlying assumption in this area is that it must be conceivably possible for a single entity in an internet-sized network to understand every message exchange. This means that it understands what a client is requesting every time a request is made, and understands what the server's response means every time a response is returned. It understands all other forms of message exchange also. This concept is REST's uniform interface, and much of practical REST implementation is bound up in understanding how REST allows this uniform interface to evolve and change. The uniform interface is central to REST's ability to usefully introduce intermeditaries, to provide web browsers, and in general to keep complexity at a level below the size of the network instead of greater than the size of the network. By keeping complexity low, REST aims to allow network effects to flourish rather than be cancelled out.

At first it seems like we are just talking about semantics. For instance, isn't it possible to understand every exchange over a Web-services stack? All we would have to do is understand all of the IDL files governing the system, and we would be able to understand all message exchanges.

The fault in this thinking is in assuming that the number of IDL files will grow at a rate slower than the size of the network. We may be able to define a few IDL files that are useful to a large number of users, but we will always have special needs. The popular IDL files are likely to need a number of "insert some data here" slots in order to be widely applicable, so an architectural style will need to be built up around any popular IDL to ensure that the information it allows through evolves in a way that maintains the interface's usefulness.

REST contends that one IDL file should be enough to rule them all. It contends that a family is not needed, just one definition that is allowed to evolve in a way consistent with the REST style. It says that the file should be allowed to define methods that everyone understands, and applies a consistent meaning to. It then says that most methods should have an "insert data here" slot, with a label indicating what kind of data is being transferred.

REST anchors the interface in a globally defined exchange of documents, the format of which are to be defined outside of the main interface definition. Objects that implement the generalised IDL file are called resources. It's as simple as that. These resources are typically a facade behind which more application-specific objects live. A single object may be refered to by several resources, or a particular resource operation may map to several object method invocations.

In REST, a URL is like an SQL select statement. It chooses a subset of the total application's data to operate on. Consider a URL like this: <http://example.com/SELECT%20*%20FROM%20MyTable>. A HTTP GET to that resource might retrieve the MyTable contents, and some sort of CSV document format might be a minimal mapping to retrieve data. However, the data could be returned in other formats such as HTML or something more structured such as atom. It would depend on the data being offered as to which formats the data could be encoded into, but each available format should return essentially the same data. Different document formats will exist to preserve varying levels of semantics associated with the source data.

A HTTP PUT request is where the old CRUD analogy falls down. In classic CRUD you would have a separate UPDATE statement with its own select. In REST, the same resource selects the same state. A client would send a HTTP PUT request to that same SELECT URL to replace the MyTable content with different content, or a HTTP POST request to add new records to MyTable. HTTP DELETE would purge the table.

If the resource had only selected a subset of the table, the operations would only affect the subset. If the resource had joined multiple tables, the operations would affect the data selected by the join. Analogues in the object-oriented world are similar. A resource may demarcate or select part of an object, a whole object, a set of object, or parts of a set of objects. Whichever way you slice it, the operations mean the same thing. GET me the resource's data in a particular document format. PUT this data in a compabile document format, replacing the data you had previously. POST this data in a compatible document format, adding it to the data you had previously. DELETE the data your resource demarcates.

Object-Orientation focuses on variation in method and parameter lists in exposing objects to the network. REST focuses on variation in data in exposing resources with consistent methods and document types to the network.

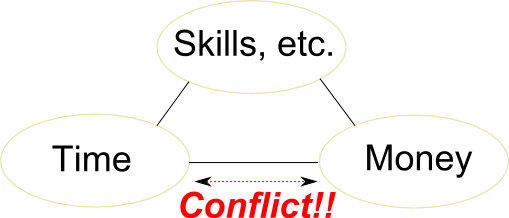

REST is designed to reduce the artificial complexity in communication. However there is still natural complexity to conquer. The IDL file with its limited set of methods must be dealt with cleanly, and allowed to evolve to suit different environments and requirements. There may still be areas which the uniform interface doesn't address due to performance problems, and specialised interfaces may still be required for fringe applications. Probably the biggest source of natural complexity, however, is the standardisation of document types.

Document types are closely related to ontology and to langauge. Wherever there is someone with a concept that noone else has there will be a name to match. Wherever there is a name, there is a document that contains that name. What I am talking about is the foundation of the semantic web. That foundation is formed of people, things, convention, agreement, and all of the social things that programmers have felt they could get away from in the past. The rise of internet-scale programming environments changes the playing field. We must agree more than we ever did, and yet we will still be left with local conventions for cultural and social reasons. Wherever there is a sub-group of people there will be documents specific to that group.

In this semantic web environment, REST cannot hope to achieve true uniformity. The best that can be achieved is a kind of uniformity rating. Ok, we are both using XML. That's good. We are both speaking atom. That's great. We still have conventions specific to our blogs, and no generic browser can hope to understand them... only render them. Ok, we are both using XML. We are both talking about trains. However, we can't agree on what the train's braking curve looks like.

REST will always break down at the point where local conventions cannot be overcome by global agreement. This is a natural limitation of society. In this environment the goal of REST document definition is to allow those areas which we do agree on to be communicated while local conventions can fail to be understood without confusing either side as to what is happening.

REST is an ideal that can be applied to specific problem domains in a way that reduces the overal communication complexity in a large network. The goal of REST and post-REST styles should be to ensure that agreement is forged on important things, while variation in local conventions are tolerated. Generic software should be able to understand as much as is required to make business processes work, and where local variation is essential specific software introduced. The goal is to create an environment under which technology is not what holds us back from a true read/write semantic web.

Benjamin